Retrieval-Augmented Generation (RAG) is an innovative approach that elevates Large Language Models (LLMs) by incorporating external knowledge sources. This empowers AI systems to process and generate human-like text with greater accuracy and contextual understanding. This article delves into the design of RAG, explores its code implementation, and unveils its potential to revolutionize various NLP applications.

Why RAG? Understanding LLM Limitations

LLMs are trained on massive datasets of text and code. This enables them to perform remarkable feats, from composing creative text in many formats to translating languages. However, LLMs have inherent limitations:

- Limited Factual Knowledge: LLMs primarily rely on statistical patterns within their training data. This can lead to factual inconsistencies or outdated information in their responses.

- Black Box Nature: The internal workings of LLMs are intricate, making it challenging to understand how they arrive at specific outputs. This opacity can hinder trust and transparency.

- Data Leakage: LLMs can inadvertently memorize and regurgitate specific phrases from their training data, reproducing factual errors or biases present in that data.

RAG tackles these limitations by introducing an external knowledge dimension to LLM operations.

Decoding RAG: Design and Functionality

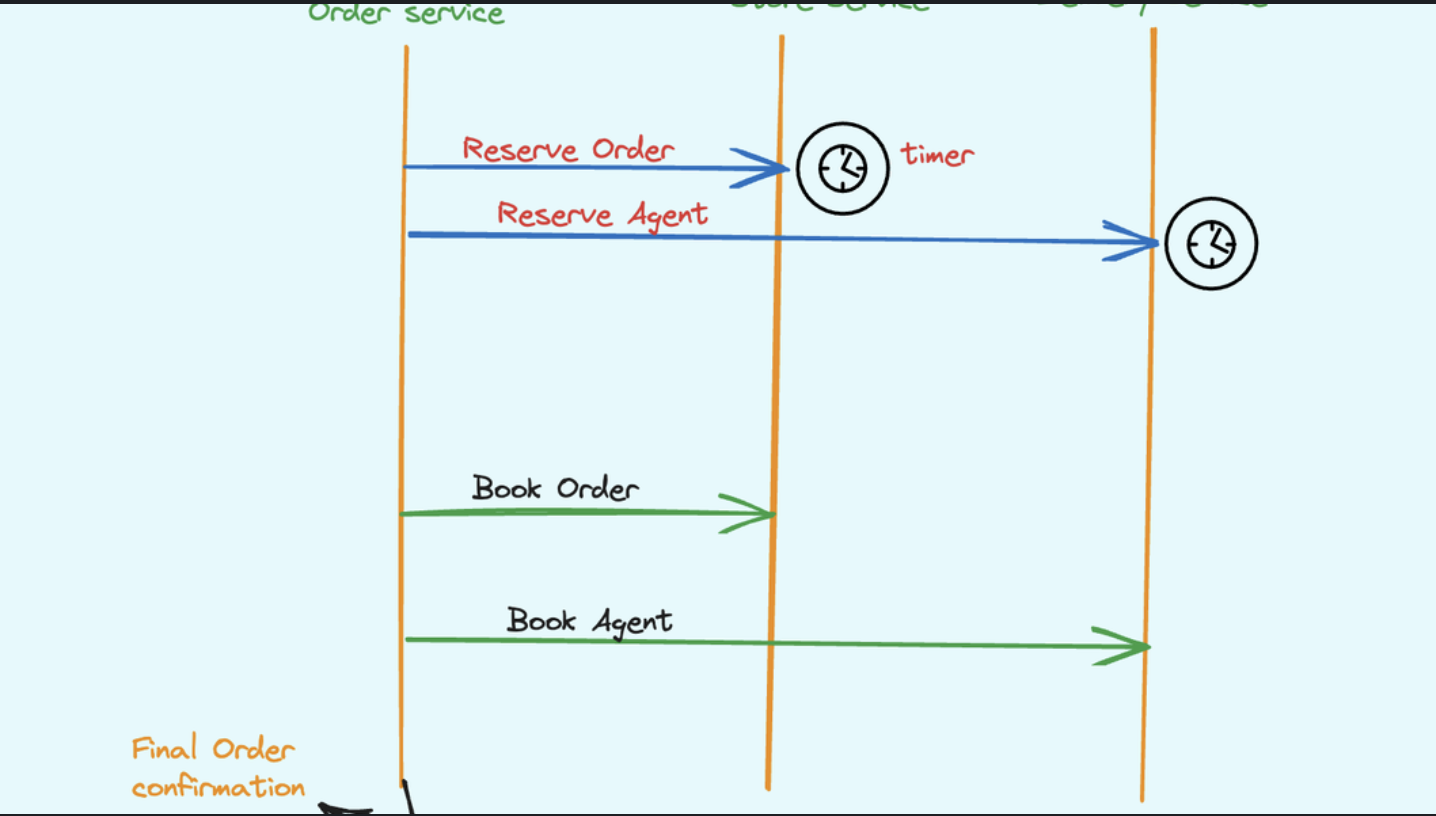

RAG comprises two key modules:

- Retrieval Module: This module acts as a skilled information scout. It scans a vast external knowledge base (like Wikipedia) to find relevant passages or documents corresponding to a given prompt or question.

- Generative Module: This is essentially the LLM itself. Once the retrieval module identifies pertinent information, the generative module leverages this retrieved context to craft a response.
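
To make the retrieval module's job more concrete, here is a minimal sketch that ranks passages by bag-of-words cosine similarity with the prompt. This is an illustrative stand-in, not how any particular RAG system works: production retrievers usually rely on learned embeddings and a vector index. The function names (`tokenize`, `cosine_similarity`, `retrieve`) and the toy knowledge base are assumptions made for this example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(prompt, knowledge_base, top_k=2):
    """Return the top_k (score, passage) pairs most similar to the prompt."""
    query = Counter(tokenize(prompt))
    scored = [
        (cosine_similarity(query, Counter(tokenize(passage))), passage)
        for passage in knowledge_base
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Hypothetical toy knowledge base, for illustration only.
knowledge_base = [
    "The Eiffel Tower is located in Paris, France.",
    "Python is a popular programming language.",
    "The Great Wall of China is visible in many photographs.",
]

results = retrieve("Where is the Eiffel Tower?", knowledge_base, top_k=1)
for score, passage in results:
    print(f"{score:.2f}  {passage}")
```

The passage about the Eiffel Tower ranks first because it shares the most words with the question. Word overlap is a crude proxy for relevance, which is why real systems tend to prefer semantic embeddings.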

Here’s a simplified breakdown of RAG’s workflow:

- Input Prompt: The user presents a question or prompt.

- Retrieval Module in Action: The retrieval module examines the knowledge base, seeking documents that align with the prompt’s content.

- Knowledge Infusion: The retrieved documents are merged with the original prompt, forming a richer context for the generative module.

- Enhanced Response Generation: The generative module, empowered by the additional context, produces a response that is both factually grounded and tailored to the prompt.
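
The "knowledge infusion" step is mostly string assembly, and a small sketch may help. The prompt template, the function names (`build_augmented_prompt`, `generate`), and the `echo_llm` stand-in below are all assumptions for illustration; a real system would call an actual model and would likely tune the template.

```python
def build_augmented_prompt(prompt, retrieved_documents):
    """Merge retrieved passages with the user's prompt into one context block."""
    context = "\n".join(
        f"[{i}] {doc}" for i, doc in enumerate(retrieved_documents, start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {prompt}\n"
        "Answer:"
    )


def generate(augmented_prompt, llm):
    """Pass the augmented prompt to any callable that maps text to text."""
    return llm(augmented_prompt)


def echo_llm(text):
    """Hypothetical stand-in for a real model: it only reports the prompt size."""
    return f"(model output for a {len(text)}-character prompt)"


docs = ["RAG pairs a retrieval module with a generative module."]
augmented = build_augmented_prompt("What are the two modules of RAG?", docs)
print(augmented)
print(generate(augmented, echo_llm))
```

Numbering the passages (`[1]`, `[2]`, ...) is one possible design choice: it gives the model a handle for referring back to individual sources in its answer.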

Code Snippet (Illustrative Purpose)

Python (Simplified):

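```python
def retrieval_module(prompt, knowledge_base, top_k=2):
    # Placeholder search over a list of strings: rank documents by how many
    # words they share with the prompt. Real systems typically use embeddings.
    words = set(prompt.lower().split())

    def overlap(doc):
        return len(words & set(doc.lower().split()))

    return sorted(knowledge_base, key=overlap, reverse=True)[:top_k]


def generative_module(prompt, retrieved_documents):
    # Placeholder generation: a real implementation would send this
    # augmented prompt to an LLM and return the model's output.
    context = "\n".join(retrieved_documents)
    return f"Context:\n{context}\n\nQuestion: {prompt}"


def rag_response(prompt, knowledge_base):
    retrieved_docs = retrieval_module(prompt, knowledge_base)
    return generative_module(prompt, retrieved_docs)
```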

Note: This is a conceptual representation, and actual RAG implementations involve sophisticated techniques and libraries.

The Power of RAG: Applications and Benefits

RAG unlocks a new level of sophistication for NLP tasks:

- Question Answering: RAG empowers LLMs to provide more accurate and up-to-date answers to complex questions.

- Fact-Checking and Verification: By citing retrieved information sources, RAG fosters trust and transparency in LLM outputs.

- Reduced Data Leakage: RAG lessens the dependence on the LLM’s internal knowledge, reducing the chance that memorized or biased training data surfaces in responses.

- Domain-Specific Adaptation: RAG allows for tailoring LLMs to specific domains by incorporating specialized knowledge bases.

How Does RAG Address LLM Hallucinations?

RAG combats LLM hallucinations, which are essentially fabricated or nonsensical responses, by grounding the LLM’s generation in factual evidence. Here’s how:

- External Knowledge Anchor: LLMs often rely on statistical patterns in their training data, which can lead to making things up. RAG injects real-world information from the retrieval module, providing a factual anchor for the LLM’s response.

- Reduced Statistical Noise: Imagine a room filled with whispers (the LLM’s training data). Hallucinations arise from misinterpreting these whispers. RAG brings in a clear voice (retrieved information) that clarifies the true meaning.

- Transparency Through Citation: RAG sometimes includes citations to the retrieved knowledge sources. This allows users to assess the trustworthiness of the information and identify potential biases, further reducing the risk of hallucinations slipping through the cracks.

- Focus on Retrieval Relevance: The effectiveness of RAG hinges on the retrieval module’s ability to find truly relevant information. Highly relevant information acts as a stronger counterbalance to the statistical noise within the LLM, minimizing the chance of hallucinations.

In essence, RAG provides a compass for the LLM, guiding it away from the murky waters of hallucinations and towards the clear shores of factual responses.
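
The points about retrieval relevance and citation can be sketched together. The example below assumes each retrieved passage arrives as a `(score, source, text)` tuple and that scores fall between 0 and 1; the `min_score` threshold of 0.3, the function names, and the `stub_llm` stand-in are hypothetical choices for illustration, not values taken from any specific RAG implementation.

```python
def select_evidence(scored_passages, min_score=0.3):
    """Keep only passages whose relevance score clears the threshold."""
    return [
        (score, source, text)
        for score, source, text in scored_passages
        if score >= min_score
    ]


def answer_with_citations(prompt, scored_passages, llm, min_score=0.3):
    """Answer from relevant evidence, or decline when nothing is relevant enough."""
    evidence = select_evidence(scored_passages, min_score)
    if not evidence:
        return {
            "answer": "I could not find relevant sources for this question.",
            "citations": [],
        }
    context = "\n".join(f"[{source}] {text}" for _, source, text in evidence)
    answer = llm(f"Context:\n{context}\n\nQuestion: {prompt}\nAnswer:")
    return {"answer": answer, "citations": [source for _, source, _ in evidence]}


def stub_llm(augmented_prompt):
    """Hypothetical stand-in for a real model call."""
    return "RAG grounds its answers in retrieved text."


# Hypothetical scored passages, as a retriever might return them.
scored = [
    (0.82, "doc-1", "RAG grounds generation in retrieved text."),
    (0.05, "doc-2", "An unrelated passage about cooking."),
]

result = answer_with_citations("How does RAG reduce hallucinations?", scored, stub_llm)
print(result["answer"])
print("Sources:", result["citations"])
```

Declining to answer when no passage clears the threshold is one way to avoid generating from weak evidence. Returning the source identifiers alongside the answer lets readers check the claims themselves.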

The Future of Retrieval-Augmented Generation

RAG is a significant advancement in NLP, paving the way for more reliable and informative interactions with AI systems. As RAG continues to evolve, we can expect even more groundbreaking applications in areas like education, research, and intelligent virtual assistants.

This is just a starting point for your exploration of RAG. Further research can delve into the intricacies of retrieval techniques, the selection of suitable knowledge bases, and the ongoing development of RAG for even more impactful applications. Don’t worry, I will cover these topics in more detail in upcoming editions 💡